Applications like virtual and augmented reality require a range of sensors, and the data from these sensors is linked together with sensor fusion.

Complex systems make copious use of data, especially with today’s embedded and cloud computing capabilities. What if your new product needs to use inputs from a large number of sensors to provide intended functionality? This is the central idea in sensor fusion. This concept is normally discussed in terms of automotive electronics as modern cars integrate data from multiple sensors, but other products can use the same concepts to provide a range of desired functions. If your next system will be using sensor fusion to integrate data from multiple sources, here are some components you’ll need to create a working system.

What is Sensor Fusion?

Sensor fusion is what its name suggests: data from a large number of sensors is collected and fed into a processor, and the combined data is then used for a range of applications. Some end applications that require sensor fusion include autonomous vehicles, robotics, control systems, industrial automation, AI, augmented reality, and much more. Even products like advanced medical monitors, smart appliances, and smart home systems will increasingly make use of sensor fusion.

So what makes sensor fusion different from, say, anything else involving reading and processing sensor data? It’s all a matter of scale and how the data is used. Your typical data acquisition system may only use a small number of sensors for different tasks, and different sensor inputs may not always be used together to make semi-autonomous decisions. Sensor fusion is both an embedded programming idea and a hardware idea in that the embedded software processes much more data and uses it to make more complex decisions.
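To make "using different sensor inputs together" concrete, here is a minimal sketch of one classic textbook technique, inverse-variance weighting, which combines independent readings of the same quantity so that the less noisy sensor counts for more. It is only an illustration, not a recommended algorithm for any particular product. The function name, the two temperature sensors, and their noise figures are all hypothetical.

```python
def fuse_inverse_variance(readings):
    """Fuse independent estimates of the same quantity.

    readings: list of (value, variance) pairs, each variance > 0.
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = []
    for _, variance in readings:
        if variance <= 0:
            raise ValueError("variance must be positive")
        # A less noisy sensor (smaller variance) gets a larger weight.
        weights.append(1.0 / variance)
    total_weight = sum(weights)
    fused_value = sum(
        w * value for w, (value, _) in zip(weights, readings)
    ) / total_weight
    # The fused variance is never larger than the best single sensor's.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings: (temperature in C, variance in C^2).
thermistor = (25.4, 0.5 ** 2)  # assumed standard deviation of 0.5 C
ic_sensor = (25.0, 0.2 ** 2)   # assumed standard deviation of 0.2 C

value, variance = fuse_inverse_variance([thermistor, ic_sensor])
print(f"fused: {value:.2f} C, std dev: {variance ** 0.5:.2f} C")
```

The fused estimate lands closer to the more precise sensor, and its uncertainty is lower than either input alone. That improvement is the basic payoff of combining streams rather than reading them separately.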

If this sounds something like machine learning or AI, it’s not too far off the mark. AI/ML models can be constructed to accommodate different data structures as well as data from a range of sources for a single inference task. They are largely agnostic to data type and data structure, and the same is true of other multi-stream systems that don’t use ML models for decision making.

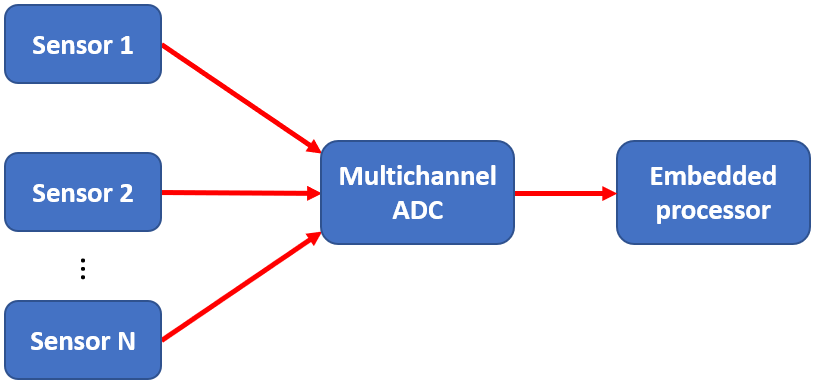

This block diagram shows the high-level architecture involved in sensor fusion. Note that the ADC stage may be integrated into the embedded processor. If you like, processing could even happen on an external device or in the cloud.

The main question for a designer becomes: how do I “fuse” and process multiple streams of data from sensors? This is as much a software engineering question as it is a hardware design question. We won’t address the algorithmic side here as this is still an area of active development among computer scientists and software engineers. On the hardware side, there is a broad range of components that are needed to enable data collection and processing as part of sensor fusion.

Components for Sensor Fusion Systems

The exact set of components you need for sensor fusion depends in part on the application area:

Small devices like wearables might need smaller components to keep enclosure size small. Consider integrated components or smaller SMD components.

Highly specific systems like autonomous vehicles have looser form factor restrictions. Chipmakers may begin releasing specialty components to aid sensor fusion in newer automobiles.

Other systems with non-specific or modular form factors give designers freedom to select components, and SoCs may not be developed for use in these systems.

Sensor fusion all starts with harvesting analog data before feeding it into an embedded processor.

Multichannel ADCs

Using a multichannel ADC for sensor fusion is a convenient way to bring multiple signal inputs into a single package. Multichannel ADCs used in sensor fusion generally don’t need an extremely high sampling rate; delta-sigma ADCs with sampling rates on the order of megasamples per second will provide accurate signal acquisition into the ultrasound range, which covers a broad range of analog sensors.
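A quick back-of-the-envelope check shows why megasample-per-second rates are usually enough. The sketch below assumes a multiplexed ADC, where the channels share one converter's aggregate rate; a simultaneous-sampling part would give every channel the full rate instead. The function names, the 1 MS/s and 8-channel figures, and the 2.5x oversampling margin are illustrative assumptions, not values from a datasheet.

```python
def per_channel_rate(aggregate_rate_hz, num_channels):
    """Sample rate each channel sees when channels share one converter."""
    if num_channels < 1:
        raise ValueError("need at least one channel")
    return aggregate_rate_hz / num_channels


def max_signal_frequency(sample_rate_hz, margin=2.5):
    """Highest signal frequency that can be captured at a given rate.

    The Nyquist criterion requires sampling at more than twice the
    highest signal frequency. The default margin of 2.5 is an assumed
    allowance for anti-aliasing filter roll-off.
    """
    if margin < 2.0:
        raise ValueError("margin below 2 violates the Nyquist criterion")
    return sample_rate_hz / margin


# Assumed example: 1 MS/s aggregate rate multiplexed over 8 channels.
rate = per_channel_rate(1_000_000, 8)
f_max = max_signal_frequency(rate)
print(f"per-channel rate: {rate / 1e3:.0f} kS/s")
print(f"usable bandwidth: {f_max / 1e3:.0f} kHz per channel")
```

With these assumed numbers, each channel still captures signals above 20 kHz, which is where the ultrasound range begins. Adding more channels on the same converter reduces the usable bandwidth per channel proportionally.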

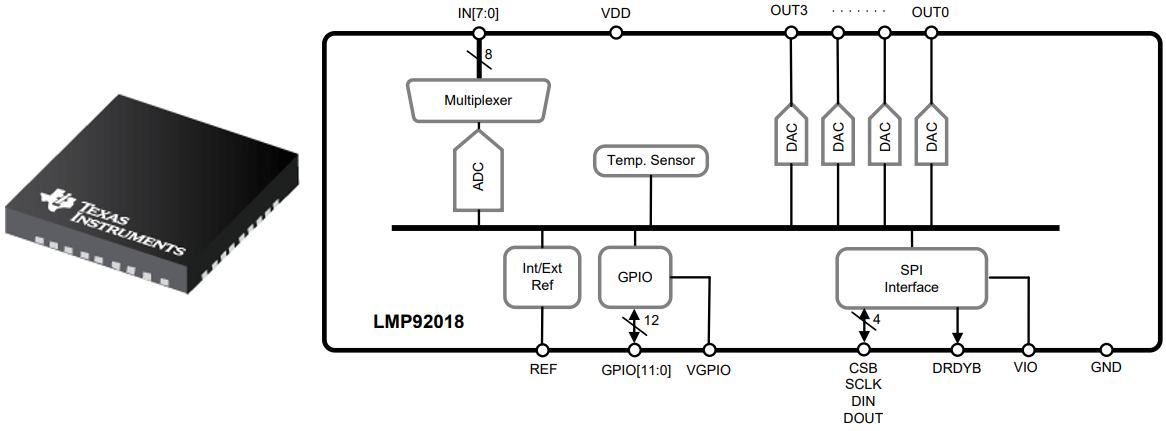

The LMP92018 multichannel ADC from Texas Instruments includes 8 simultaneous inputs with serial output. It also includes 4 DAC channels for interfacing with other analog components. [Source: LMP92018 datasheet]

Note that ADCs can output in serial or in parallel, and they can include other features like programmable gain or input filtering. The Texas Instruments LMP92018 shown above is one example of a multichannel ADC that also includes 4 DACs along with GPIO and SPI interfaces. This type of component is well suited to sensor fusion as it provides a high sample rate and an optional connection for an external reference voltage.
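Once a serial ADC hands a word to the processor, the firmware has to split it into a channel number and a conversion code, then scale the code against the reference voltage. The sketch below shows that step under loudly stated assumptions: the 16-bit frame layout (channel index in the top 4 bits, 12-bit code in the low bits) is hypothetical and is not the LMP92018's actual register format, and the 2.5 V reference is just an example value. Always take the real layout and coding from your part's datasheet.

```python
def unpack_frame(word):
    """Split a HYPOTHETICAL 16-bit ADC word into (channel, code).

    Assumed layout: bits 15..12 hold the channel index and bits 11..0
    hold the conversion result. Real parts differ.
    """
    if not 0 <= word <= 0xFFFF:
        raise ValueError("expected a 16-bit word")
    channel = (word >> 12) & 0xF
    code = word & 0x0FFF
    return channel, code


def code_to_volts(code, vref, bits, gain=1.0):
    """Convert a unipolar, straight-binary ADC code to input volts.

    V_in = (code / 2**bits) * vref / gain, where gain is the setting of
    any programmable gain stage ahead of the converter.
    """
    full_scale = 1 << bits
    if not 0 <= code < full_scale:
        raise ValueError("code out of range for this bit depth")
    return (code / full_scale) * vref / gain


# Example word: channel 3 reporting a mid-scale code (0x800).
channel, code = unpack_frame(0x3800)
volts = code_to_volts(code, vref=2.5, bits=12)
print(f"channel {channel}: code {code} is {volts:.3f} V")
```

Keeping the unpacking and the scaling in separate functions makes it easier to swap in a different frame format or an external reference voltage without touching the rest of the pipeline.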

Application-specific Processing

After sensor data is gathered with ADCs, some of your inputs may require subsequent application-specific processing, either in a small MCU or with an ASIC. As an example, DSP components can be used to perform some signal conditioning tasks that may be required in specific application domains (computer vision from video data is a prime example). If an ASIC doesn’t provide the DSP steps you need, you can implement these with a general-purpose processor.

General-purpose Processing

This is where your application will be implemented and executed. Something as simple as a small MCU or FPGA could be used to receive inputs from a serial or parallel multichannel ADC, or from DSP ICs. Fusion from a larger number of sensors in parallel will require greater processing speed, on-board memory, bit depth, and I/Os to interface with your other components.
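A rough budget helps when sizing the processor for a given sensor count. The sketch below estimates the raw data rate coming off the ADC channels and the RAM needed to hold one processing window. It assumes each sample is padded up to whole bytes and that the firmware double-buffers (one buffer filling while the other is processed). The function names and the 8-channel, 125 kS/s, 12-bit, 10 ms figures are illustrative assumptions only.

```python
import math


def data_rate_bytes_per_s(num_channels, sample_rate_hz, bits_per_sample):
    """Raw acquisition data rate, with samples padded to whole bytes."""
    bytes_per_sample = math.ceil(bits_per_sample / 8)
    return num_channels * sample_rate_hz * bytes_per_sample


def buffer_bytes(data_rate, window_ms, double_buffered=True):
    """RAM needed to hold one processing window of samples.

    Double buffering (assumed by default) doubles the requirement so
    acquisition can continue while the previous window is processed.
    """
    size = math.ceil(data_rate * window_ms / 1000)
    return 2 * size if double_buffered else size


# Assumed example: 8 channels, 125 kS/s each, 12-bit samples.
rate = data_rate_bytes_per_s(8, 125_000, 12)
ram = buffer_bytes(rate, window_ms=10)
print(f"data rate: {rate / 1e6:.1f} MB/s")
print(f"buffer RAM for a 10 ms window: {ram / 1000:.0f} kB")
```

Comparing numbers like these against the SRAM and interface bandwidth listed in a candidate MCU's datasheet is a quick way to rule parts in or out before you look at processing speed.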

If there is an SoC that integrates multiple processing and communication functions into a single package, there’s no harm in using this component as the processor for sensor fusion. However, not all of these specialized components include multiple ADC channels for sensor fusion, and those that do may include other features that are unnecessary for your application, which increases the component price.

The STM32F373 MCU from STMicroelectronics is a standard platform for providing general-purpose processing power in sensor fusion.

For extreme computing workloads, such as in embedded AI, you’ll have to go the GPU route for processing power until chipmakers develop low-power ASICs for implementing AI models. NVIDIA arguably corners the market in this area thanks to the Jetson platform, but these systems are still limited in terms of sensor fusion. Depending on the number of sensors you’re working with, you may need to pair the main board with an external multichannel ADC.

Finding the Components You Need for Sensor Fusion

So far, we’ve looked at an array of different components for sensor fusion. These components are readily available and can be used to develop proofs of concept, evaluation modules for specialty components, measurement and acquisition equipment, and much more. For more specialized products, like AI-enabled IoT products, chipmakers are developing a range of specialty SoCs that integrate many or all of the components seen here into a single chip. These more advanced components for sensor fusion tasks are still in development, and they may not provide the channel count, sampling rate, or gain you need for your system.

No matter what components you need for sensor fusion, you can find them with the right electronics search engine. When you need to find amplifiers, multichannel ADCs, and other components for your next system, Octopart provides a complete solution for component selection and supply chain management. The advanced filtering features will help you select exactly the components you need. Take a look at our integrated circuits page to get started on your search.
