AI, machine learning, IoT, edge computing… the list of buzzwords in the embedded hardware and software communities is long, but these stand out because they’ve become common well beyond developer circles. The progress in general-purpose embedded computing over the past decade or so is impressive; it has reached the point where I can deploy one of my company’s blockchain nodes in my back pocket. General-purpose computing has certainly come a long way and has transformed modern life.

What about embedded AI? Advances in computing platforms for embedded AI have lagged well behind those in general-purpose embedded computing. Until recently, embedded AI was not really embedded at all; it relied heavily on the cloud. A new class of ASICs is set to change the dynamics of embedded AI, and hardware designers should prepare to build systems around these new components. Here’s what’s coming up in the world of embedded AI and how new embedded systems can take advantage of these changes.

From the Cloud to the Edge

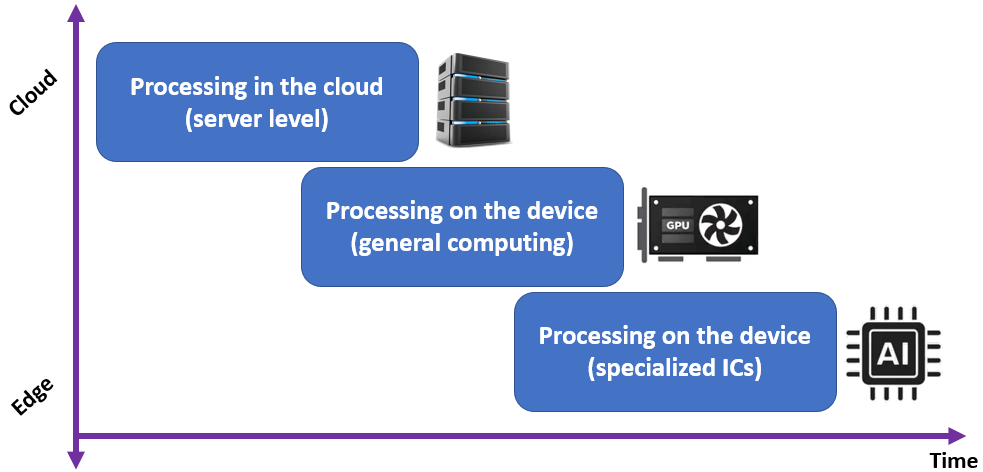

The dream in embedded AI is power-efficient computation at the edge with specialized hardware. The AI models on these devices need to be trainable, either on the device itself or in the cloud; cloud training then requires transmitting the trained model back to the edge device. Looking at how the AI landscape has evolved over the recent past, we’ve seen a gradual migration of computing capabilities from the cloud to the edge.

Much of this is aided by cheaper, more powerful embedded computing platforms. A board like a Raspberry Pi or BeagleBone is powerful enough to perform numerical prediction or simple classification (e.g., of images, text, or audio) on the device with pre-trained models. However, training a model on these platforms takes a significant amount of time, so training is best performed in the cloud. Newer AI-specific hardware platforms are trying to fill this gap and have succeeded in speeding up computation and enabling more advanced tasks. One example is combined object detection, facial recognition, and image classification on video data, which enables applications in security and automotive systems, among others.

Progress of embedded AI from the cloud to the edge.

Google and NVIDIA have been in something of an arms race recently to release hardware platforms specialized for embedded AI applications. Google’s Edge TPU, an edge-focused member of its Tensor Processing Unit (TPU) family, was released as part of the Coral hardware platform, which is specialized for running TensorFlow Lite models on the device. This puts it closer to a true application-specific embedded AI platform.

NVIDIA’s Jetson platform still resembles a general-purpose computing platform in that it uses GPUs, but its software stack is specialized for on-device AI computations. Currently, there are four AI-specialized options available from NVIDIA that can be deployed in embedded applications. These NVIDIA products still draw relatively high power and generate significant heat because they are built around general-purpose GPU architectures, which limits their usefulness in smaller IoT products.
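To put the power gap in rough numbers, the sketch below estimates ideal battery runtime as stored energy divided by average load. Every figure in it is an assumption chosen for illustration, not a measured or vendor-published value:

- a 2000 mAh, 3.7 V Li-ion cell
- a GPU-class module drawing about 10 W
- a hypothetical AI-specialized IC averaging 1 mW

The helper name `battery_life_hours` is likewise hypothetical.

```python
# Illustrative battery-life arithmetic. All figures are assumptions,
# not measured or vendor-published values.


def battery_life_hours(capacity_mah: float, voltage_v: float, load_mw: float) -> float:
    """Ideal runtime in hours: stored energy (mWh) divided by average load (mW).

    Ignores converter losses, self-discharge, and capacity derating,
    so real-world runtimes will be shorter.
    """
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / load_mw


# Assumed battery: a 2000 mAh, 3.7 V single-cell Li-ion pack (7.4 Wh).
CAPACITY_MAH = 2000.0
VOLTAGE_V = 3.7

# Assumed loads: a GPU-class module at ~10 W versus a hypothetical
# AI-specialized IC averaging 1 mW.
gpu_module_h = battery_life_hours(CAPACITY_MAH, VOLTAGE_V, load_mw=10_000.0)
ai_ic_h = battery_life_hours(CAPACITY_MAH, VOLTAGE_V, load_mw=1.0)

print(f"GPU-class module: {gpu_module_h:.2f} h")
print(f"AI-specialized IC: {ai_ic_h:.0f} h (~{ai_ic_h / 24:.0f} days)")
```

Under these assumptions, the GPU-class module runs for well under an hour. The 1 mW device runs for roughly ten months, before accounting for regulator losses and battery derating.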

Embedded AI Bottlenecks: Watch for AI-Specialized ICs

The bottleneck in embedded AI is not processing power; it’s size and heat. The general-purpose processing power already exists, and GPU/TPU products have settled into the role of general-purpose embedded AI. Think of an Amazon Echo running Alexa. It doesn’t need an application-specific processor for its AI tasks, because the device has many other functions to perform. Furthermore, it draws constant power from a wall outlet and is designed to stay connected to the internet. The range of tasks performed by this class of embedded systems is broad enough that specialized ICs aren’t necessary.

Newer IoT products built for highly specific applications and functions need something new: an AI-specialized IC. By “AI-specialized IC,” I don’t mean a GPU or TPU. Instead, I mean an IC whose hardware architecture is specialized for running specific types of AI models, with lower power consumption and few to no peripherals.
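Much of what such an architecture accelerates is multiply-accumulate (MAC) arithmetic. Below is a minimal sketch, assuming an 8-bit quantized fully connected layer. This is a common format for edge inference; the Coral Edge TPU, for example, runs 8-bit quantized models. The loop shows the int8 multiplies and wide-integer accumulation that dedicated hardware replicates in parallel arrays. The function names, values, and scale factor are hypothetical.

```python
# Pure-Python sketch of an int8 quantized dense layer: the kind of
# multiply-accumulate (MAC) workload AI-specialized hardware is built around.
# All values and the scale factor are hypothetical, for illustration only.
from typing import List


def int8_dense(x_q: List[int], w_q: List[List[int]], bias_q: List[int]) -> List[int]:
    """Compute y = W @ x + b with int8 inputs/weights and wide integer accumulation.

    x_q:    input activations, each in [-128, 127]
    w_q:    weight matrix (one row per output neuron), entries in [-128, 127]
    bias_q: per-output bias, already at the accumulator scale
    """
    outputs = []
    for row, b in zip(w_q, bias_q):
        acc = b  # accumulator starts at the bias (typically int32 in hardware)
        for w, x in zip(row, x_q):
            acc += w * x  # one MAC: the operation accelerators replicate in parallel
        outputs.append(acc)
    return outputs


def requantize(acc: List[int], scale: float) -> List[int]:
    """Rescale accumulators back to int8, saturating at the int8 range."""
    return [max(-128, min(127, round(a * scale))) for a in acc]


x = [12, -5, 33, 7]
w = [[1, 2, -1, 0],
     [-3, 0, 4, 5]]
b = [10, -20]

acc = int8_dense(x, w, b)
print(acc)
print(requantize(acc, scale=0.25))
```

Hardware gains its efficiency by performing many of these MACs per clock cycle with narrow integer operands. This Python sketch loops over them one at a time instead.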

Fabless IC startups are stepping up to fill the gap, either with new IP cores that can be integrated into other products or with standalone ICs that provide highly application-specific embedded AI capabilities. One company I’m working with is set to release just such a product, targeting 5G-IoT, robotics, Industry 4.0, and other areas where embedded AI is expected to dominate.

If you’re building new IoT products, robotics, automotive products, or other systems that need embedded AI capabilities, watch for newer AI-specialized ICs with some of the following features:

- On-device training: This aspect of embedded AI still relies on cloud computing power, but the best AI-specialized ICs will allow on-device training, e.g., in supervised learning applications.

- High-speed interfaces: Any AI-specialized IC needs to interface with other components on the board, and that interface has to keep up with the data the IC processes. I2C and SPI are common choices for simple, lower-bandwidth connections, although I would expect faster interfaces to be used for connecting to host processors and other computer peripherals.

- Optimization for specific AI models: AI/ML models involve repetitive matrix calculations and optimization steps, so the hardware architecture should be designed to execute the algorithms used in different AI/ML models efficiently.

- Low power consumption: Getting down to sub-mW power levels for AI calculations is critical for persistent on-device training, classification, and prediction in data-heavy AI applications. An optimized architecture can help designers overcome the heat bottleneck, which also allows the overall size of a new product to be reduced.
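Interface bandwidth matters as soon as an AI IC has to ingest sensor data. The rough arithmetic below is a sketch under stated assumptions. It estimates the time to move one 224 × 224 RGB frame (8 bits per channel) over three links:

- I2C in Fast-mode (400 kHz)
- I2C in Fast-mode Plus (1 MHz)
- SPI at an assumed 20 MHz clock

It ignores addressing, command, and framing overhead. The function names are hypothetical.

```python
# Rough transfer-time estimates for moving one input frame to an AI IC.
# Frame size and the SPI clock rate are assumptions for illustration only.


def i2c_transfer_s(payload_bytes: int, bus_hz: float) -> float:
    """I2C sends 8 data bits plus 1 ACK bit per byte.

    Addressing and start/stop conditions are ignored.
    """
    return payload_bytes * 9 / bus_hz


def spi_transfer_s(payload_bytes: int, sclk_hz: float) -> float:
    """Single-data-line SPI clocks out 8 bits per byte.

    Command and framing overhead are ignored.
    """
    return payload_bytes * 8 / sclk_hz


# Assumed payload: one 224 x 224 RGB image at 8 bits per channel.
FRAME_BYTES = 224 * 224 * 3  # 150,528 bytes

for label, t in [
    ("I2C Fast-mode (400 kHz)", i2c_transfer_s(FRAME_BYTES, 400e3)),
    ("I2C Fast-mode Plus (1 MHz)", i2c_transfer_s(FRAME_BYTES, 1e6)),
    ("SPI at an assumed 20 MHz SCLK", spi_transfer_s(FRAME_BYTES, 20e6)),
]:
    print(f"{label}: {t * 1000:.1f} ms per frame")
```

Under these assumptions, a single frame takes several seconds over Fast-mode I2C but only about 60 ms over SPI. This is why image- or audio-heavy workloads tend to push designers toward SPI or faster links.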

Full Circle: Bringing it Back to the Data Center

I expect new specialized ICs and IP cores to be widely released in the next couple of years. This shift away from general-purpose computing toward application-specific hardware is what will enable more advanced embedded AI applications. OEMs will be able to produce smaller devices with greater computational efficiency than general-purpose MCUs can provide.

I’ve been discussing embedded AI at the edge, but AI-specialized ICs could also filter back up the network hierarchy to the data center. Offloading AI tasks from general-purpose processors onto ASICs would reduce overall power consumption at the data center level.

The electronics landscape can change quickly, and embedded AI is no exception. Whether you’re looking for the newest embedded AI ASICs or any other component for a new product, Octopart will be here to help you find the components you need.
